LumiGAN: Unconditional Generation of Relightable 3D Human Faces

3DV 2024, Spotlight

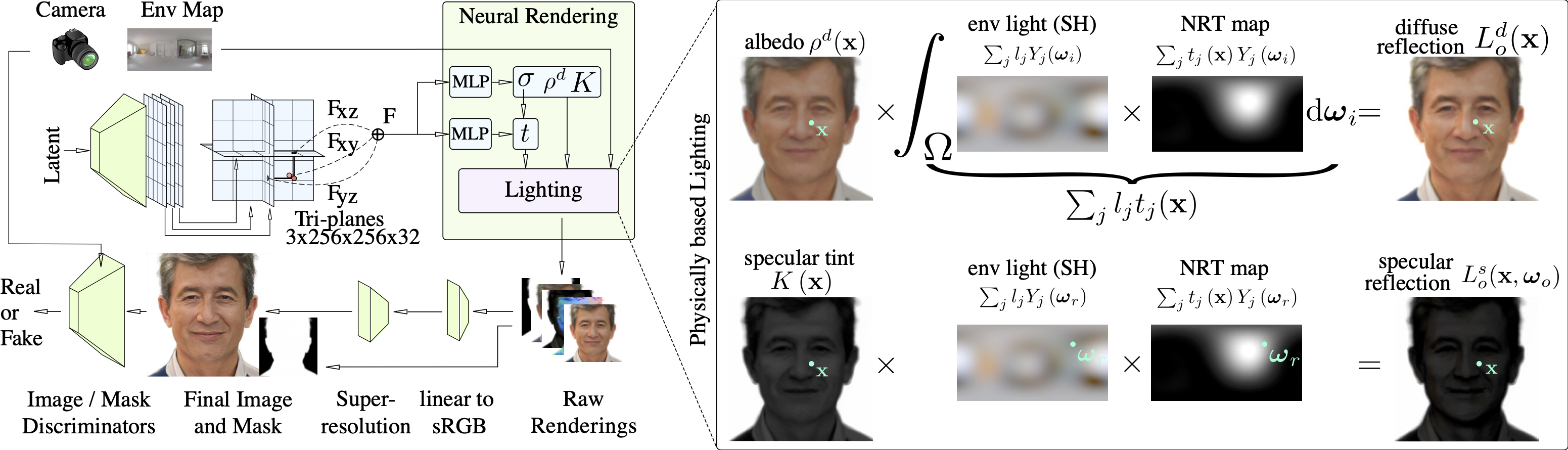

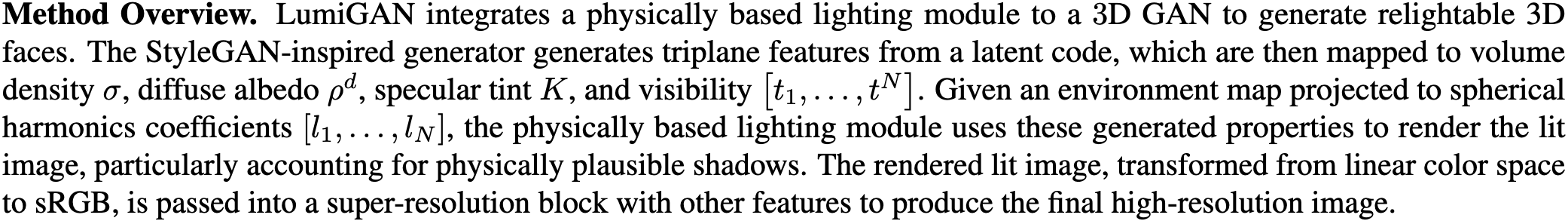

Abstract: Unsupervised learning of 3D human faces from unstructured 2D image data is an active research area. While recent works have achieved an impressive level of photorealism, they commonly lack control of lighting, which prevents the generated assets from being deployed in novel environments. To this end, we introduce LumiGAN, an unconditional Generative Adversarial Network (GAN) for 3D human faces with a physically based lighting module that enables relighting under novel illumination at inference time. Unlike prior work, LumiGAN can create realistic shadow effects using an efficient visibility formulation that is learned in a self-supervised manner. LumiGAN generates plausible physical properties for relightable faces, including surface normals, diffuse albedo, and specular tint without any ground truth data. In addition to relightability, we demonstrate significantly improved geometry generation compared to state-of-the-art non-relightable 3D GANs and notably better photorealism than existing relightable GANs.

LumiGAN can generate photorealistic 3D human faces that can be rendered under any illumination condition. Compared to prior works, we support environment lighting and account for physically plausible shadows on the face.

Because LumiGAN's physically based lighting module uses the generated face geometry, such as surface normals, in its radiance computations, the normals are refined and stay consistent with the rendering.

Instead of generating RGB colors, LumiGAN learns to generate several components, including albedo, visibility as radiance transfer, and normals via 3D shape, which are used by our physically based lighting module to compute the final colors. This framework supports view-dependent effects and allows for post-generation relighting with arbitrary environment maps.
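To make the decomposition concrete, here is a minimal sketch of how albedo, normals, and visibility could combine into a final color under a discretized environment map. It assumes a purely diffuse (Lambertian) surface and a per-light visibility value in [0, 1]; the function name `shade_diffuse` and the light tuple layout are hypothetical, and this is not the paper's actual radiance-transfer formulation, which also handles view-dependent specular effects.

```python
import math


def dot(a, b):
    """Dot product of two 3-vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def shade_diffuse(albedo, normal, lights):
    """Shade one surface point with a Lambertian model (illustrative assumption).

    albedo: RGB diffuse albedo, e.g. [0.5, 0.4, 0.3]
    normal: surface normal (need not be unit length)
    lights: iterable of (direction, radiance_rgb, solid_angle, visibility),
            one entry per environment-map sample; visibility is 1.0 when the
            light is unoccluded and 0.0 when it is fully shadowed.
    """
    n = normalize(normal)
    irradiance = [0.0, 0.0, 0.0]
    for direction, radiance, solid_angle, visibility in lights:
        # Lights behind the surface contribute nothing.
        cos_term = max(0.0, dot(n, normalize(direction)))
        weight = visibility * cos_term * solid_angle
        for c in range(3):
            irradiance[c] += weight * radiance[c]
    # Lambertian BRDF is albedo / pi.
    return [a / math.pi * e for a, e in zip(albedo, irradiance)]


# One white light directly above the surface, first unoccluded, then shadowed.
overhead = ([0.0, 0.0, 1.0], [1.0, 1.0, 1.0], math.pi)
lit = shade_diffuse([0.5, 0.5, 0.5], [0.0, 0.0, 1.0], [overhead + (1.0,)])
shadowed = shade_diffuse([0.5, 0.5, 0.5], [0.0, 0.0, 1.0], [overhead + (0.0,)])
```

In this sketch, relighting after generation amounts to calling `shade_diffuse` again with a different `lights` list while the albedo and normal stay fixed. In a full pipeline the visibility values would also depend on the light directions, so they would be re-evaluated for each new environment map.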

Given any portrait image, we can apply GAN inversion to LumiGAN to get the relightable 3D representation of the person and re-render them under novel illuminations and viewpoints.

Because LumiGAN is a generative model, its latent code space can be smoothly interpolated under arbitrary illumination conditions and from any viewpoint.
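As a rough illustration of latent interpolation, the sketch below builds a straight-line path between two latent codes. It assumes latent codes are plain lists of floats and that linear blending is an acceptable interpolation scheme; the helper name `interpolate_latents` is hypothetical, and the generator call that would turn each code into a relightable face is deliberately left out.

```python
def interpolate_latents(z_start, z_end, num_steps):
    """Return num_steps latent codes linearly blended from z_start to z_end."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    if len(z_start) != len(z_end):
        raise ValueError("latent codes must have the same length")
    path = []
    for i in range(num_steps):
        t = i / (num_steps - 1)  # t runs from 0.0 to 1.0 inclusive
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


# Three codes: the start, the midpoint, and the end.
path = interpolate_latents([0.0, 2.0], [1.0, 0.0], 3)
```

Each code on the path would then be passed to the generator and rendered with a chosen camera pose and environment map, so identity, lighting, and viewpoint can be varied independently.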

@inproceedings{deng2024lumigan,
  title={Lumigan: Unconditional generation of relightable 3d human faces},
  author={Deng, Boyang and Wang, Yifan and Wetzstein, Gordon},
  booktitle={2024 International Conference on 3D Vision (3DV)},
  pages={302--312},
  year={2024},
  organization={IEEE}
}

Acknowledgements: We thank Eric R. Chan for constructive discussions on training EG3D. We thank Alex W. Bergman, Manu Gopakumar, Suyeon Choi, Brian Chao, Ryan Po, and other members of the Stanford Computational Imaging Lab for their help with computational resources and infrastructure. This project was supported in part by Samsung and Stanford HAI. Boyang Deng is supported by a Meta PhD Research Fellowship. Yifan Wang is supported by a Swiss Postdoc.Mobility Fellowship. The webpage template is borrowed.